As part of Crastina’s current theme, Science & Sound, we have set out to find examples of scientists using sound to communicate their work. But not in terms of using the lyrics of a song to talk about science; we were interested in the use of sound to perceptualize, interpret or convey real data. Dr. Mark Temple, an academic working at Western Sydney University, is one such scientist. Having read more about his work, I have become fascinated by DNA music and, as such, I reached out to him to learn more.

Hello Dr Temple, thank you for agreeing to answer our questions. Could you tell us more about how and why you started translating DNA sequences into sounds?

I was a professional musician before I went on to complete a PhD in molecular biology. For many years, I kept these interests separate since I saw nothing in common between the two. I later became aware of the concept of sonification which I take to mean ‘using audio to reveal the properties of some phenomena’. Since I am particularly fascinated by the structure and function of DNA, I thought it would be interesting to use DNA sequence information to generate audio. The key to this project was to establish if the audio could reveal something about the DNA sequence that was not obvious by visual inspection alone.

About three years ago, I published a study on using sound for DNA sequence analysis. DNA is a long string-like molecule made up of repeating G, A, T and C bases which I convert to a long sequence of musical notes. This had been done previously by others but the novelty of my approach was that the resulting audio reflects both the raw DNA sequence and importantly its functional motifs. The study demonstrated that the audio alone could be used to distinguish a gene sequence from repetitive DNA, mutations could be detected in telomere sequences, and surprisingly non-coding DNA sequences were unique sounding. These differences could be detected because the audio was designed in the context of what was known about the function of common DNA motifs. This approach did not consider the metadata referring to specific gene information or polymorphisms, only the raw sequence data was processed.

What do you think are the benefits of presenting data as sounds as opposed to other communication media? Does the hearing sense offer some extra capabilities in terms of data analysis?

Clearly our minds are able to process sound and vision simultaneously and our intelligence is able to place these into a context that becomes meaningful. There are many great websites that display DNA sequence information and present the annotated sequence using interactive graphics, colours, text labels and such but none of them use audio to complement the visual displays, which I think is a major oversight (sic). In the real world, if I flick a switch, I can hear it click; if the DNA sequence triggers a biological process, I’d like to hear that click also. If the DNA contains a sequence that is known to trigger the start of gene expression (to make a protein) then it is important to hear that trigger!

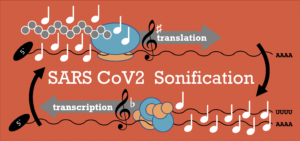

I think the multi-layered nature of audio is particularly useful when looking at overlapping sequence information. With audio I can hear and perceive a conversation whilst listening to music and also hear a knock on the door as these events happen in real time; the audio occurs along a strict timeline and is layered. The ability to layer sound with different events to build up a more complex listening experience is particularly advantageous. In my recent sonification studies of the coronavirus I made the audio more complex by using up to ten layers of audio, each of these representing a different attribute. Additionally, one audio layer may be influenced by a trigger that occurred in the past whereas another audio layer may reflect the current attribute being processed.

That brings us nicely to my next question about your work on the coronavirus genome – what new information did you gather there?

I thought the coronavirus genome was a good sequence to sonify because it has been well studied since variants of this virus are responsible for SARS, MERS and the current COVID-19 pandemic. Over the years, the genome of coronavirus has been annotated with metadata that clearly indicates where a gene sequence begins, which regions are untranslated, which regions contain binding sites, and other structural information. It was a good challenge to sonify these metadata in addition to sonifying the raw DNA sequence itself. This approach produces more complex audio compared to my prior work. An article of mine on sonification of the coronavirus genome was recently published in BMC Bioinformatics titled ‘Real-time audio and visual display of the Coronavirus genome’. I also generated a visual display that is synchronised to the audio to create a context to represent what is actually being sonified and to highlight that the two approaches are complementary.

DNA and RNA sequences can be processed by cells in three possible ways, which we refer to as reading frames. These act as the punctuation and grammar used to organise the G, A, T and C bases into biological words so that they can be read. If you read these words in the correct frame, you often find long open chapters of information (genes). These chapters always begin with start sequences and end with stop sequences, which my methods detect as well as other important sequences. My sonification of the viral genome picked-up all of the known chapters of information, but I was surprised to note that within these chapters (in the unread or ‘incorrect’ frames) there were also long stretches of information which can be thought of as alternative paragraphs of information. The sonification highlights what had already been suggested by other scientists: that alternative proteins or functional sequences of RNA could be made from these, and they may play a role in the biology of the virus. In fact, the virus lifecycle relies upon a shift between reading frames in the processing of the polyprotein and my sonification highlights other regions in the genome where alternative products are theoretically possible if other frameshifts occur.

Your work is clearly popular with non-specialists; did you also present these data as audio to other specialists in your field? How was it received?

When I first presented my sonification studies to my peers, I was met by either enthusiasm or scepticism. There didn’t seem to be much middle ground, which was surprising to me. I was probably overly critical of myself initially, thinking ‘what is a molecular biologist doing making music out of DNA?’. However, once I convinced myself that the technique was informative, I submitted the manuscript for peer review. The reviewers were sceptical to a point but the editor was really positive. I think it’s difficult to persuade people who do not think deeply about audio or music to instantly see the value in sonification. To be fair, it is not easy to associate the audio with the DNA or RNA sequence that is being represented and it takes time to learn to hear things in a new audio language. Nevertheless, I have presented the sonification work at scientific conferences and had great conversations with other bioinformaticians about its applications. Many confess to have had similar ideas but they were constrained by the strict tenure of post-doctoral positions; I am not constrained by a grant and therefore had more freedom to pursue these ideas.

After the publication of the work, I was also contacted by some high-profile science blogs and people outside of the science profession began to engage in the ideas on social media, which I thought was fascinating. While I had not shared my work on social media before, the popularity of my work on these platforms was really useful to promote it more widely. I received many collaboration requests from doctors, artists, and musicians. For example, a performing arts centre suggested combining a lightning talk with a creative musical performance to play music that incorporated the audio from the sonification algorithms. This project was quite a challenge and made me think more deeply about non-traditional left field generative music. We performed again in the ‘National Science Week’ activities in the following year and have more recently been asked to prepare a performance based on the coronavirus sonification, this is to happen when social distancing allows. I enjoy the challenge to make science interesting to the wider population and I have enjoyed the foray into generative and partially improvised music based on sequence sonification.

Is there still much to discover in the sound of DNA or do you have other systems to explore? What advice would you give other scientists thinking of employing sonification in their own work?

I think there is a lot of scope to sonify biological information in meaningful ways and I think the scientific community is more receptive to the worth of sonification as more peer reviewed literature emerges. I think the challenge is to have a clear focus on which attributes you want to emphasise in the data and highlight these in the audio. In a science context, this needs to be done in a systematic and non-biased way so that it can be clearly distinguished by an informed listener: often much of the data is apparently random to a non-specialist unless it is processed in a way that highlights key features.

Were to find Dr. Mark Temple

- Mark’s website where you can sonify some DNA!

- Mark’s Youtube Channel

- Mark’s study “An auditory display tool for DNA sequence analysis” (2017)

- Mark’s article “What does DNA sound like? Using music to unlock the secrets of genetic code” in The Conversation

- The Hummingbirds – Blush (1988)

- The Hummingbirds – Alimony (1986)

Trackbacks & Pingbacks

-

[…] to wonder if there is any science on the connection between sound and identity. Not in the sense of the music our DNA can play. But rather, do our brains recognise and react differently to sounds we associate with childhood, […]

Leave a Reply

Want to join the discussion?Feel free to contribute!

Leave a Reply

- Turning frustration into change: Jean-Sébastien Caux, founder of SciPost - August 9, 2021

- Dr Nicola Nugent: Publishing Manager at the Royal Society of Chemistry - December 7, 2020

- Public Engagement and Trust in Science: In Conversation with Dr. Farzana Meru - November 23, 2020

- Is the peer review process trustworthy? Perspectives by Dr. Jurado Sánchez - November 4, 2020

- Prof. Maria Baghramian: Policy, Expertise and Trust in Action - October 29, 2020

- Prof. Luke Drury: ‘When Experts Disagree’ - October 5, 2020

- Are we what we hear? A reflection on sound, identity and science communication - September 27, 2020

- Sign your Science - September 22, 2020

- Raven the Science Maven - August 18, 2020

- Dr Mark Temple: DNA Sonification or when Scientist are musicians - August 5, 2020

I would like to get my DNA made into music..how do I do that..